Imagine a world without lightning-fast internet, complex simulations, or even the ability to binge-watch your favorite shows. That is a world without the driving force behind modern technology: computing power. From the smallest smartphone to the largest supercomputer, computing power determines what we can achieve with technology. Understanding how it works, how it has evolved, and where it is heading is crucial in today’s increasingly digital age.

What is Computing Power?

Defining Computing Power

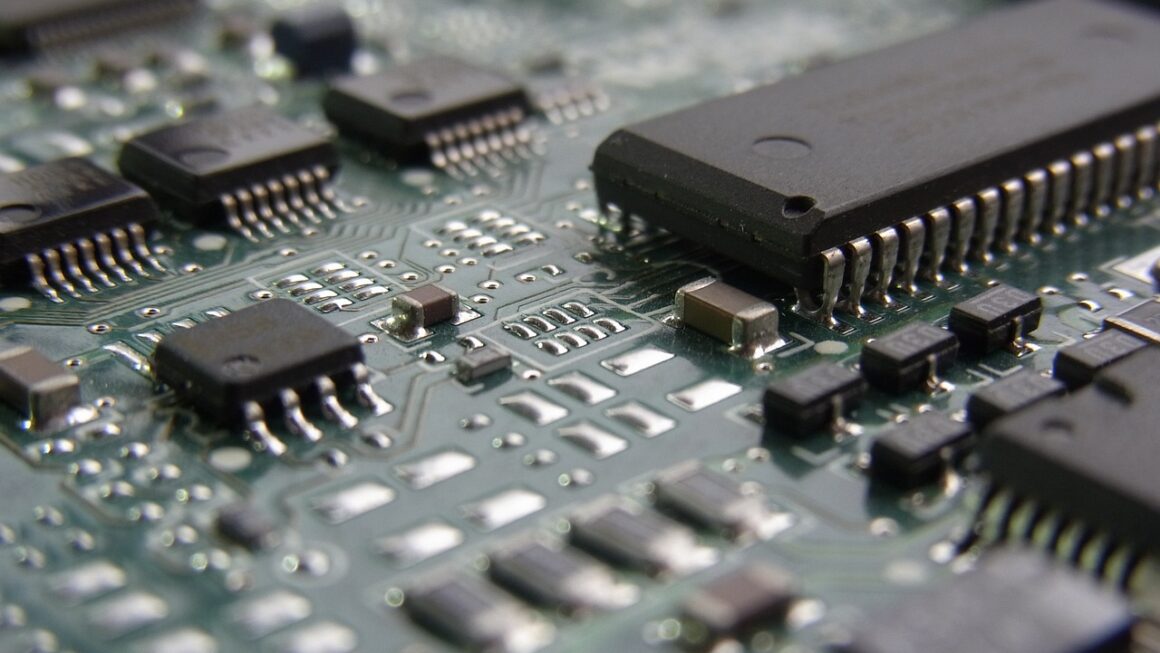

Computing power, at its core, refers to the ability of a computer to process data and perform calculations. It is essentially the brainpower of a machine, determining how quickly and efficiently it can execute instructions. It depends on hardware, particularly the central processing unit (CPU) and the graphics processing unit (GPU), and on how well software is optimized to use that hardware.

Measuring Computing Power

Several metrics are used to measure computing power, each providing a different perspective on performance. Key metrics include:

- Clock Speed: Measured in hertz (Hz), and for modern CPUs typically in gigahertz (GHz), this is the number of cycles a CPU completes per second. A higher clock speed generally means faster processing, but it is not the only factor: the architecture, the number of instructions completed per cycle (IPC), and the instruction set also play significant roles.

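- Instructions Per Second (IPS): A more direct measure of computational throughput, commonly expressed in millions (MIPS), billions (BIPS), or trillions (TIPS) of instructions per second. IPS figures are only meaningfully comparable between processors with similar instruction sets, because a single instruction can do different amounts of work on different architectures.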

- Floating-Point Operations Per Second (FLOPS): Particularly relevant for scientific computing and other workloads dominated by numerical calculation. Scales run from megaFLOPS (MFLOPS) through gigaFLOPS (GFLOPS), teraFLOPS (TFLOPS), and petaFLOPS (PFLOPS) to exaFLOPS (EFLOPS). For context, Frontier, at Oak Ridge National Laboratory, became the first supercomputer to officially surpass 1 exaFLOPS (one quintillion floating-point operations per second) on the TOP500 list, in 2022.

- Benchmarks: Standardized tests designed to evaluate the performance of a computer under specific workloads. Popular benchmarks include Geekbench, Cinebench, and SPEC CPU.
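
These metrics are related: a rough upper bound on throughput is cores × clock speed × operations per cycle. The sketch below assumes a hypothetical 8-core, 3.5 GHz CPU that completes 16 double-precision floating-point operations per cycle per core; these figures are illustrative and not taken from any specific product, and the helper names `peak_ops_per_second` and `format_si` are hypothetical. Real workloads rarely reach theoretical peak.

```python
def peak_ops_per_second(cores: int, clock_ghz: float, ops_per_cycle: float) -> float:
    """Theoretical peak throughput: cores x cycles per second x operations per cycle."""
    return cores * clock_ghz * 1e9 * ops_per_cycle


def format_si(value: float, unit: str) -> str:
    """Express a rate using the largest SI prefix that keeps the number >= 1."""
    prefixes = [(1e18, "E"), (1e15, "P"), (1e12, "T"), (1e9, "G"), (1e6, "M"), (1e3, "k")]
    for scale, prefix in prefixes:
        if value >= scale:
            return f"{value / scale:.2f} {prefix}{unit}"
    return f"{value:.2f} {unit}"


# Hypothetical CPU: 8 cores, 3.5 GHz, 16 FLOPs per cycle per core.
peak = peak_ops_per_second(cores=8, clock_ghz=3.5, ops_per_cycle=16)
print(format_si(peak, "FLOPS"))  # 448.00 GFLOPS
```

Replacing FLOPs per cycle with instructions per cycle (IPC) gives a similar rough estimate of peak IPS.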

Factors Affecting Computing Power

Several factors contribute to a computer’s overall computing power:

- CPU Architecture: The design and organization of the CPU, including the number of cores, cache size, and instruction set architecture (ISA).

- GPU Capabilities: Particularly important for graphics-intensive tasks such as gaming, video editing, and machine learning.

- RAM (Random Access Memory): The amount of RAM determines how much data and how many programs the system can keep in fast working memory at once. With too little, the system falls back on much slower storage, which hurts multitasking and responsiveness.

- Storage Speed: Solid-state drives (SSDs) offer significantly faster read and write speeds compared to traditional hard disk drives (HDDs), resulting in quicker loading times and improved overall responsiveness.

- Software Optimization: Efficiently written code and optimized algorithms can drastically improve performance, even on less powerful hardware.

The Evolution of Computing Power

From Vacuum Tubes to Transistors

The history of computing power is a fascinating journey from bulky, power-hungry vacuum tubes to the tiny, powerful microchips we use today. Early computers like ENIAC (Electronic Numerical Integrator and Computer) relied on thousands of vacuum tubes, consuming vast amounts of electricity and generating significant heat. The invention of the transistor in the late 1940s revolutionized the field, leading to smaller, more reliable, and energy-efficient computers.

The Rise of Microprocessors

The development of the microprocessor in the early 1970s marked another significant milestone. A microprocessor places an entire CPU on a single integrated circuit. Intel’s 4004, released in 1971 and widely considered the first commercially available microprocessor, contained about 2,300 transistors; later generations packed millions and eventually billions of transistors onto a single chip, driving exponential increases in computing power and enabling the personal computer.

Moore’s Law

Moore’s Law, an observation made by Gordon Moore, co-founder of Intel, holds that the number of transistors on an integrated circuit doubles approximately every two years (Moore’s 1975 revision of his original 1965 prediction of doubling every year). More transistors per chip has generally translated into more computing power. Although the pace has slowed in recent years, Moore’s Law has been a driving force behind the relentless advancement of technology for decades.
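
As a worked example of the doubling rule, the sketch below projects transistor counts forward from the Intel 4004’s roughly 2,300 transistors in 1971, assuming a strict two-year doubling period. The function name `moores_law_projection` is hypothetical, and the output is a projection of the rule, not a record of any actual chip.

```python
def moores_law_projection(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming it doubles every `doubling_years` years."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Starting point: Intel 4004 (1971), roughly 2,300 transistors.
for year in (1981, 1991, 2001, 2011, 2021):
    print(year, f"{moores_law_projection(2300, 1971, year):,.0f}")
```

Fifty years of doubling every two years is 25 doublings, a factor of 2^25 (about 33.5 million), which takes 2,300 transistors to roughly 77 billion.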

The Era of Parallel Computing

By the mid-2000s, power consumption and heat made further clock-speed increases impractical, and parallel computing emerged as a key strategy for boosting computing power. Parallel computing uses multiple processors or cores to work on different parts of a problem simultaneously. Multi-core processors are now ubiquitous, from smartphones to servers, and deliver significant performance gains in applications whose work can be split up this way.
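
The split-and-combine pattern behind parallel computing can be sketched with Python’s standard `concurrent.futures` module: divide the input range into chunks, process each chunk on a separate worker, then combine the partial results. The function names and the sum-of-squares workload are hypothetical, chosen only because the answer is easy to check.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(start: int, stop: int) -> int:
    """Sequential unit of work: sum of i*i for i in [start, stop)."""
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n: int, workers: int = 4,
                            executor_cls=ThreadPoolExecutor) -> int:
    """Split [0, n) into contiguous chunks, run them concurrently, then combine."""
    if n <= 0:
        return 0
    chunk = (n + workers - 1) // workers  # ceiling division so every index is covered
    starts = list(range(0, n, chunk))
    stops = [min(lo + chunk, n) for lo in starts]
    with executor_cls(max_workers=workers) as pool:
        partials = pool.map(sum_of_squares, starts, stops)  # one task per chunk
        return sum(partials)  # combine step


n = 1_000_000
print(parallel_sum_of_squares(n) == (n - 1) * n * (2 * n - 1) // 6)  # True
```

In CPython, the global interpreter lock means threads do not run pure-Python arithmetic like this on several cores at once. Passing `ProcessPoolExecutor` as `executor_cls` (and calling it under an `if __name__ == "__main__":` guard) does use multiple cores; the decomposition and combination steps stay the same.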

Applications of Computing Power

Scientific Research

High-performance computing (HPC) is indispensable for scientific research, enabling researchers to tackle complex problems in fields such as:

- Climate Modeling: Simulating Earth’s climate system to predict future climate change scenarios.

- Drug Discovery: Screening potential drug candidates and simulating their interactions with biological targets.

- Astrophysics: Modeling the formation and evolution of galaxies and stars.

- Fluid Dynamics: Simulating the flow of fluids, such as air around an airplane wing.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) rely heavily on computing power, particularly for training complex models. Deep learning, a subset of ML, trains artificial neural networks with many layers, which requires vast amounts of data and processing power. GPUs are often used to accelerate training because they can carry out the large matrix operations involved in parallel.
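
To see why training is so compute-hungry, a common back-of-the-envelope count is that multiplying an m×k matrix by a k×n matrix takes about 2·m·k·n floating-point operations (one multiply and one add per term). The layer dimensions, the 10 TFLOPS sustained rate, and the helper names below are hypothetical.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate FLOPs for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * k * n


def seconds_at(flops: float, sustained_flops_per_s: float) -> float:
    """Time to perform `flops` operations at a given sustained throughput."""
    return flops / sustained_flops_per_s


# Hypothetical dense layer: batch of 1,024 inputs, 4,096 input and 4,096 output features.
forward = matmul_flops(1024, 4096, 4096)
print(forward)  # 34359738368
print(seconds_at(forward, 10e12))  # seconds for this one layer at an assumed 10 TFLOPS
```

A commonly used rule of thumb is that the backward pass costs roughly twice the forward pass, so a single training step is on the order of three times this figure, repeated across many layers and a very large number of steps.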

Data Analytics and Big Data

The ability to process and analyze massive datasets is crucial for businesses and organizations. Computing power is essential for tasks such as:

- Customer Relationship Management (CRM): Analyzing customer data to improve marketing and sales strategies.

- Fraud Detection: Identifying fraudulent transactions in real-time.

- Risk Management: Assessing and mitigating financial risks.

Entertainment and Gaming

The entertainment industry relies heavily on computing power for creating realistic graphics, immersive virtual reality experiences, and complex game simulations. Modern video games require powerful GPUs and CPUs to deliver high frame rates and detailed visuals.
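
A target frame rate translates directly into a time budget: game logic, physics, and rendering for each frame must all finish within 1/fps seconds. A minimal sketch (the function name is hypothetical):

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to simulate and render one frame at a target frame rate."""
    return 1000.0 / fps


for target in (30, 60, 144):
    print(f"{target} fps -> {frame_budget_ms(target):.2f} ms per frame")
# 30 fps -> 33.33 ms per frame
# 60 fps -> 16.67 ms per frame
# 144 fps -> 6.94 ms per frame
```

Raising the target from 60 to 144 fps cuts the per-frame budget by more than half, which is why high-refresh gaming demands faster CPUs and GPUs.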

Everyday Applications

Computing power is also essential for everyday applications such as:

- Web Browsing: Rendering complex web pages with interactive elements.

- Video Streaming: Decoding and displaying high-resolution video content.

- Mobile Applications: Running complex apps on smartphones and tablets.

- Office Productivity: Creating and editing documents, spreadsheets, and presentations.

The Future of Computing Power

Quantum Computing

Quantum computing represents a paradigm shift in computing technology, leveraging the principles of quantum mechanics to solve certain problems believed to be intractable for classical computers. While quantum computing is still in its early stages of development, quantum computers have the potential to revolutionize fields such as cryptography, drug discovery, and materials science.

Neuromorphic Computing

Neuromorphic computing aims to mimic the structure and function of the human brain, creating chips that are more energy-efficient and better suited to certain types of AI tasks. Many of these chips run spiking neural networks, in which neurons communicate through discrete spikes rather than continuous values, offering potential advantages in areas such as image recognition and natural language processing.
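
A common textbook model for a spiking neuron is the leaky integrate-and-fire (LIF) neuron. The sketch below is a simplified discrete-time version with hypothetical parameters (`decay`, `threshold`, `reset`), meant only to illustrate the idea, not to describe how any particular neuromorphic chip implements it.

```python
def lif_spike_times(inputs, decay=0.9, threshold=1.0, reset=0.0):
    """Simplified discrete-time leaky integrate-and-fire neuron; returns spike time steps."""
    v = reset  # membrane potential
    spikes = []
    for t, current in enumerate(inputs):
        v = decay * v + current  # leak part of the potential, then integrate the input
        if v >= threshold:  # fire once the potential crosses the threshold
            spikes.append(t)
            v = reset  # reset after each spike
    return spikes


print(lif_spike_times([0.3] * 12))  # [3, 7, 11]
print(lif_spike_times([0.0] * 12))  # []
```

Because the neuron produces output only when it fires, downstream work happens only on spikes, which is one source of the energy savings neuromorphic designs aim for.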

Exascale Computing and Beyond

The race to exascale computing has already been won: several supercomputers can now perform more than one quintillion floating-point operations per second. The next frontier is zettascale computing (10^21 operations per second), which would represent another thousandfold increase in computing power.
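
The practical meaning of a thousandfold jump is easiest to see as time to solution: divide the total number of operations by the sustained rate. The workload size and function name below are hypothetical, and the sketch assumes the machine sustains its full rate.

```python
EXA = 1e18    # 10^18 floating-point operations per second
ZETTA = 1e21  # 10^21 floating-point operations per second


def time_to_solution(total_ops: float, ops_per_second: float) -> float:
    """Seconds needed to finish `total_ops` at a sustained rate of `ops_per_second`."""
    return total_ops / ops_per_second


workload = 1e21  # hypothetical job size: 10^21 floating-point operations
print(time_to_solution(workload, EXA))    # 1000.0
print(time_to_solution(workload, ZETTA))  # 1.0
```

A job that occupies an exascale machine for about 17 minutes would, in this idealized model, finish in one second at zettascale.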

Edge Computing

Edge computing involves processing data closer to the source, rather than relying solely on centralized data centers. This can reduce latency, improve bandwidth efficiency, and enhance privacy. Edge computing is particularly relevant for applications such as autonomous vehicles, smart cities, and industrial automation.
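
Part of the latency benefit follows from physics: a signal cannot make a round trip faster than distance divided by its propagation speed. The sketch assumes light travels through optical fiber at roughly 2 × 10^8 m/s (about two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays; the distances and the function name are hypothetical.

```python
SPEED_IN_FIBER_M_PER_S = 2.0e8  # assumption: roughly 2/3 of the speed of light in a vacuum


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip latency from signal propagation alone."""
    round_trip_m = 2 * distance_km * 1000
    return round_trip_m / SPEED_IN_FIBER_M_PER_S * 1000  # seconds -> milliseconds


for label, km in (("nearby edge node", 10), ("distant data center", 1000)):
    print(f"{label}: >= {min_round_trip_ms(km):.2f} ms")
# nearby edge node: >= 0.10 ms
# distant data center: >= 10.00 ms
```

Real round trips are longer than these bounds, but the gap between them shows why moving computation closer to the data source matters for latency-sensitive systems such as autonomous vehicles.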

Tips for Optimizing Computing Power Usage

Software Optimization

Writing efficient code and using optimized algorithms can significantly improve performance. Profiling tools can help identify bottlenecks in your code.

- Choose the right data structures and algorithms for the task.

- Minimize memory allocation and deallocation.

- Use parallel processing techniques where appropriate.

- Keep your software up-to-date.
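
Choosing the right data structure can matter more than faster hardware. The sketch below checks a list for duplicates two ways: comparing every pair, which takes O(n²) comparisons, and tracking seen values in a hash set, which takes O(n) expected time for hashable items. The function names are hypothetical, and the timings printed will vary by machine.

```python
import timeit


def has_duplicates_quadratic(items: list) -> bool:
    """Compare every pair of elements: O(n^2) comparisons."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_set(items: list) -> bool:
    """Remember seen values in a set: O(n) expected time for hashable items."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


data = list(range(2_000))  # no duplicates: the worst case for both functions
print("pairwise:", timeit.timeit(lambda: has_duplicates_quadratic(data), number=1))
print("set:     ", timeit.timeit(lambda: has_duplicates_set(data), number=1))
```

To find hot spots like this in a larger program, Python’s built-in profiler can be run from the command line, for example `python -m cProfile -s cumulative your_script.py`.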

Hardware Upgrades

Upgrading your hardware can provide a significant boost in computing power. Consider upgrading your:

- CPU: A faster CPU can improve overall performance.

- GPU: A more powerful GPU is essential for graphics-intensive tasks.

- RAM: Increasing the amount of RAM can improve multitasking and reduce slowdowns.

- Storage: Switching to an SSD can drastically improve loading times and responsiveness.

Resource Management

Proper resource management is crucial for maximizing computing power utilization.

- Close unnecessary applications and processes.

- Monitor CPU and memory usage to identify resource-intensive tasks.

- Use Task Manager (Windows) or Activity Monitor (macOS) to manage running processes.

- Consider using virtualization or containerization to optimize resource allocation.
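
Within a single program, the same idea of monitoring CPU and memory use can be applied with Python’s standard library: `time.process_time()` measures CPU time consumed by the process, and `tracemalloc` tracks memory allocated by Python code. The helper name `profile_call` is hypothetical, and this complements rather than replaces system tools, since `tracemalloc` does not see memory allocated outside Python’s allocator.

```python
import time
import tracemalloc


def profile_call(fn, *args):
    """Run fn(*args); return (result, CPU seconds, peak bytes allocated by Python code)."""
    tracemalloc.start()
    cpu_start = time.process_time()
    try:
        result = fn(*args)
    finally:
        cpu_seconds = time.process_time() - cpu_start
        _, peak_bytes = tracemalloc.get_traced_memory()  # (current, peak)
        tracemalloc.stop()
    return result, cpu_seconds, peak_bytes


# Building a full list before summing allocates far more than a generator would.
result, cpu, peak = profile_call(lambda n: sum([i * i for i in range(n)]), 100_000)
print(f"result={result} cpu={cpu:.3f}s peak={peak / 1024:.0f} KiB")
```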

Conclusion

Computing power is the engine that drives innovation across numerous fields. From scientific discovery to artificial intelligence and everyday applications, its impact is undeniable. Understanding the factors that influence computing power, its historical evolution, and future trends is essential for navigating the ever-evolving technological landscape. By optimizing software, upgrading hardware, and managing resources effectively, we can harness the full potential of computing power to solve complex problems and create a better future.
