Unlocking the potential of the digital age hinges on one crucial element: computing power. From the smartphones in our pockets to the massive data centers powering global internet services, the ability to process information quickly and efficiently is fundamental to modern life. Understanding the intricacies of computing power allows us to appreciate the incredible advancements we’ve made and anticipate the even more remarkable innovations on the horizon.

What is Computing Power?

Definition and Core Components

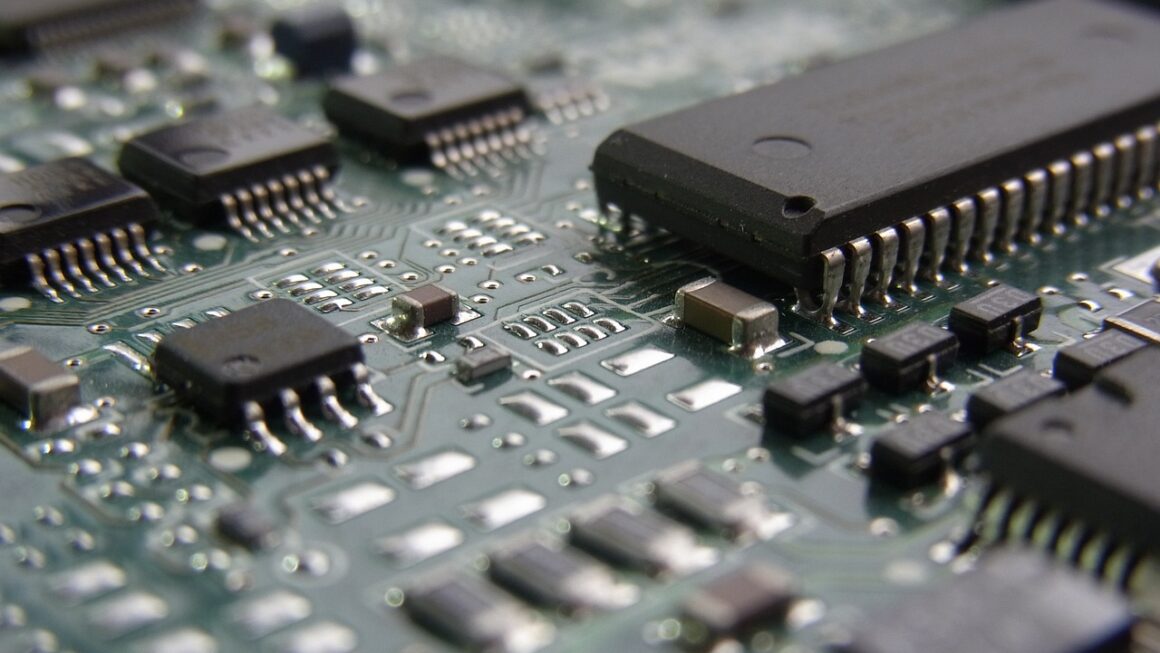

Computing power, at its core, refers to the ability of a computer or processing unit to execute instructions and perform calculations. This capacity is determined by several key components working in harmony:

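- Central Processing Unit (CPU): The “brain” of the computer, responsible for executing instructions. Its clock speed, measured in hertz (today usually gigahertz, GHz), indicates how many clock cycles it completes per second. It does not directly tell you how many instructions it finishes. Higher clock speeds generally mean faster performance when comparing otherwise similar processors.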

- Graphics Processing Unit (GPU): Initially designed for graphics rendering, GPUs have become powerful processors for parallel computing tasks, especially in areas like artificial intelligence and machine learning.

- Memory (RAM): Random Access Memory provides temporary storage for data and instructions that the CPU or GPU needs to access quickly. More RAM lets the computer keep more programs and data in fast memory at once, instead of falling back to much slower storage, so it can handle more tasks simultaneously without slowing down.

- Storage (SSD/HDD): Solid State Drives (SSDs) and Hard Disk Drives (HDDs) store data persistently. SSDs are generally much faster than HDDs, leading to quicker boot times and application loading.

Measuring Computing Power

Several metrics are used to quantify computing power, each with its own strengths and limitations:

- Clock Speed (GHz): Primarily relevant for CPUs, it indicates how many billions of clock cycles a processor completes per second. Clock speed alone is not a complete measure of performance. The number of instructions actually completed per second also depends on how much work is done in each cycle, which is shaped by factors like architecture and cache size.

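- Instructions Per Cycle (IPC): Measures how many instructions a CPU completes, on average, in a single clock cycle. A higher IPC indicates a more efficient architecture. Multiplying IPC by clock speed gives a rough estimate of instructions completed per second on one core.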

- FLOPS (Floating-Point Operations Per Second): A common metric for measuring the performance of scientific and high-performance computing systems. It indicates the number of floating-point calculations a processor can perform per second.

- Benchmarks: Standardized tests that measure the performance of a computer system under specific workloads. Popular benchmarks include Geekbench, Cinebench, and PassMark.

- Actionable Takeaway: When evaluating computing power, consider multiple metrics rather than relying on a single number. Look at clock speed, IPC, FLOPS (if relevant to your use case), and benchmark scores to get a holistic view of performance.
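Clock speed counts cycles and IPC counts instructions per cycle, so the two multiply. The sketch below turns that into back-of-the-envelope arithmetic. The clock speeds, IPC values, core counts, and FLOPs-per-cycle figures are hypothetical examples, not specs of any real processor. Real throughput is lower once memory stalls and other delays are accounted for.

```python
# Rough, back-of-the-envelope performance estimates.
# All hardware figures below are hypothetical example values, not specs of a real chip.

def instructions_per_second(clock_hz: float, ipc: float, cores: int = 1) -> float:
    """Theoretical instruction throughput: cycles/s x instructions/cycle x cores."""
    return clock_hz * ipc * cores


def peak_flops(clock_hz: float, flops_per_cycle: int, cores: int) -> float:
    """Theoretical peak FLOPS: cores x cycles/s x floating-point ops per cycle per core."""
    return cores * clock_hz * flops_per_cycle


# Two hypothetical CPUs: the one with the higher clock is not the faster one.
cpu_a = instructions_per_second(clock_hz=4.0e9, ipc=2.0)  # 8.0e9 instructions/s per core
cpu_b = instructions_per_second(clock_hz=3.0e9, ipc=3.5)  # 1.05e10 instructions/s per core
print(f"CPU A: {cpu_a:.2e} instr/s, CPU B: {cpu_b:.2e} instr/s")

# Hypothetical 8-core chip at 3 GHz doing 16 double-precision FLOPs per cycle per core.
print(f"Peak: {peak_flops(3.0e9, 16, 8) / 1e9:.0f} GFLOPS")
```

Peak figures like these are upper bounds. Benchmarks show how close a real workload gets to them.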

Factors Influencing Computing Power

Hardware Limitations and Advancements

The fundamental building blocks of computing, transistors, are subject to physical limitations. Moore’s Law, the observation that the number of transistors on a microchip doubles roughly every two years, has slowed in recent years as transistors approach the physical limits of miniaturization. However, innovations in materials science, chip architecture, and manufacturing processes continue to push the boundaries of what’s possible.

- Chip Architecture: Modern CPUs and GPUs use complex architectures, such as multi-core designs, to improve performance. Multi-core processors can run several independent streams of instructions (threads) at the same time, significantly increasing throughput for workloads that can be split across cores.

- Cache Memory: Small, fast memory located on the processor chip that stores frequently accessed data. A larger and faster cache can significantly reduce the time it takes to retrieve data.

- Manufacturing Processes: Advances in manufacturing processes, such as using Extreme Ultraviolet (EUV) lithography, allow for the creation of smaller and more densely packed transistors, leading to increased performance and power efficiency.
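Multi-core designs only speed up the part of a workload that can actually run in parallel. Amdahl's law, which the text above does not name, is a standard way to quantify this. It gives the speedup on n cores as 1 / ((1 - p) + p / n), where p is the parallel fraction of the work. The sketch below applies it to a hypothetical workload that is 90% parallelizable.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workload in which 90% of the work can run in parallel.
for n in (1, 2, 4, 8, 16, 64):
    print(f"{n:>3} cores -> {amdahl_speedup(0.9, n):.2f}x")
```

In this model, the serial 10% caps the speedup at 10x no matter how many cores are added. That is one reason cache, IPC, and single-core speed still matter on many-core chips.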

Software Optimization and Efficiency

While hardware is essential, software plays a crucial role in maximizing computing power. Optimized algorithms and efficient code can significantly reduce the resources required to perform a task.

- Algorithm Design: Choosing the right algorithm can have a dramatic impact on performance. For example, using a sorting algorithm with a time complexity of O(n log n) will be much faster than one with a complexity of O(n^2) for large datasets.

- Parallel Processing: Utilizing multiple cores or processors to perform tasks simultaneously. This requires carefully designing software to distribute the workload effectively.

- Compiler Optimization: Compilers translate high-level programming languages into machine code that the processor can execute. Optimizing compilers can generate more efficient code, leading to faster execution times.

- Actionable Takeaway: Don’t overlook the importance of software optimization. Even the most powerful hardware can be bottlenecked by inefficient code. Consider profiling your code to identify areas for improvement and optimize algorithms for maximum performance.
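To make the O(n log n) versus O(n^2) point concrete, the sketch below counts the comparisons made by insertion sort (quadratic) and merge sort (n log n) on the same random input. These are textbook implementations chosen for illustration. Comparison counts are a proxy for running time, not a benchmark.

```python
import random


def insertion_sort(values):
    """O(n^2) comparisons on average and in the worst case. Returns (sorted_list, comparisons)."""
    items = list(values)
    comparisons = 0
    for i in range(1, len(items)):
        key = items[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if items[j] <= key:
                break
            items[j + 1] = items[j]  # shift larger element one slot right
            j -= 1
        items[j + 1] = key
    return items, comparisons


def merge_sort(values):
    """O(n log n) comparisons. Returns (sorted_list, comparisons)."""
    items = list(values)
    if len(items) <= 1:
        return items, 0
    mid = len(items) // 2
    left, c_left = merge_sort(items[:mid])
    right, c_right = merge_sort(items[mid:])
    merged, comparisons = [], c_left + c_right
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged, comparisons


random.seed(0)
for n in (100, 1_000, 2_000):
    data = [random.random() for _ in range(n)]
    sorted_a, cmp_a = insertion_sort(data)
    sorted_b, cmp_b = merge_sort(data)
    assert sorted_a == sorted_b == sorted(data)
    print(f"n={n:>5}: insertion sort {cmp_a:>9,} comparisons, merge sort {cmp_b:>7,}")
```

The gap widens as n grows. To find where a real Python program spends its time, the standard library's cProfile module can be run on a script with `python -m cProfile -s cumulative your_script.py`, where the script name is a placeholder.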

The Role of Computing Power in Modern Applications

Artificial Intelligence and Machine Learning

AI and machine learning are heavily reliant on computing power, particularly for training complex models. The ability to process massive datasets and perform billions or even trillions of calculations per second is essential for developing accurate and effective AI systems.

- Training Deep Learning Models: Deep learning models, such as neural networks, require vast amounts of data and computational resources to train. GPUs are often used to accelerate the training process due to their parallel processing capabilities.

- Real-time Inference: Once trained, AI models can be used to make predictions in real-time. This requires significant computing power, especially for applications like autonomous driving and natural language processing.

- Edge Computing: Bringing computing power closer to the data source, such as in IoT devices or autonomous vehicles. This reduces latency and improves responsiveness.
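Much of the compute in training a neural network goes into matrix multiplications. Multiplying an (m x k) matrix by a (k x n) matrix takes m·n·k multiply-add pairs, conventionally counted as 2·m·n·k FLOPs. The sketch below applies that count to a small stack of dense layers. The layer sizes, batch size, and 50 TFLOPS throughput are hypothetical, and the estimate ignores activation functions and memory traffic. The 3x factor for a training step assumes each dense layer's backward pass costs two matrix multiplies of the same size, one for input gradients and one for weight gradients.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs to multiply an (m x k) matrix by a (k x n) matrix, using the 2*m*k*n convention
    (one multiply and one add per term of each of the m*n dot products)."""
    return 2 * m * k * n


def dense_layer_forward_flops(batch: int, in_features: int, out_features: int) -> int:
    """Forward pass of y = x @ W + b: the matrix multiply plus one add per output for the bias."""
    return matmul_flops(batch, in_features, out_features) + batch * out_features


# Hypothetical network: three dense layers given as (in_features, out_features).
layers = [(1024, 4096), (4096, 4096), (4096, 1000)]
batch = 256

forward = sum(dense_layer_forward_flops(batch, i, o) for i, o in layers)
# Assumption: backward pass ~ 2x forward (input-gradient and weight-gradient matmuls),
# so one training step costs roughly 3x the forward pass.
train_step = 3 * forward

throughput = 50e12  # hypothetical sustained throughput of 50 TFLOPS
print(f"forward pass: {forward / 1e9:.1f} GFLOP, training step: ~{train_step / 1e9:.1f} GFLOP")
print(f"~{train_step / throughput * 1e3:.2f} ms per training step at the assumed throughput")
```

Multiplying the per-step cost by millions of steps, and by models far larger than this toy network, shows why training relies on GPUs and other parallel accelerators.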

Scientific Research and Simulation

Scientific research often involves complex simulations and data analysis, which require immense computing power. From simulating climate change to modeling the behavior of molecules, high-performance computing (HPC) systems are essential tools for scientists.

- Climate Modeling: Simulating the Earth’s climate requires solving complex equations that take into account various factors, such as temperature, pressure, and wind patterns.

- Drug Discovery: Modeling the interactions between drugs and biological molecules to identify potential new treatments.

- Particle Physics: Analyzing data from particle accelerators to understand the fundamental building blocks of the universe.
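Climate models solve partial differential equations on a grid, stepping the whole grid forward in time. The sketch below uses a toy stand-in that is vastly simpler than any real climate model. It steps the 1-D heat equation with an explicit finite-difference scheme and counts cell updates. The function name `diffuse` and the parameter `r` are illustrative choices. `r` stands for alpha·dt/dx², and it must stay at or below 0.5 for this scheme to be stable.

```python
def diffuse(temps, r, steps):
    """Step the 1-D heat equation u_t = alpha * u_xx with the explicit (FTCS) finite-difference
    scheme, where r = alpha * dt / dx**2. End cells are held fixed (Dirichlet boundaries).
    Returns the final temperatures and the number of cell updates performed."""
    if not 0 < r <= 0.5:
        raise ValueError("the explicit scheme is only stable for 0 < r <= 0.5")
    u = list(temps)
    updates = 0
    for _ in range(steps):
        new_u = u[:]  # boundary cells are copied unchanged
        for i in range(1, len(u) - 1):
            new_u[i] = u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1])
            updates += 1
        u = new_u
    return u, updates


rod = [0.0] * 50
rod[25] = 100.0  # a hot spot in the middle of a cold rod
final, updates = diffuse(rod, r=0.25, steps=200)
print(f"peak temperature after 200 steps: {max(final):.2f}, cell updates: {updates:,}")
```

The stability limit helps explain why resolution is so expensive. Halving the grid spacing doubles the number of cells. Because dt must shrink with dx², it also quadruples the number of time steps, so even this 1-D run costs about 8x more. In three dimensions the growth is steeper still.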

Business and Finance

Computing power plays a critical role in various business and financial applications, including data analysis, fraud detection, and high-frequency trading.

- Big Data Analytics: Analyzing large datasets to identify trends and patterns that can inform business decisions.

- Fraud Detection: Using machine learning algorithms to detect fraudulent transactions in real-time.

- High-Frequency Trading: Executing trades at extremely high speeds using sophisticated algorithms.

- Actionable Takeaway: Identify the specific computing power requirements of your application. Consider factors such as the size of the data, the complexity of the algorithms, and the required latency. Then, choose hardware and software solutions that meet those requirements.
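Real-time fraud detection has to score each transaction as it arrives, so per-transaction cost matters. Real systems use trained machine learning models. The sketch below is a much simpler statistical stand-in. It flags amounts more than three standard deviations from the running mean, and it updates the mean and variance in O(1) time per transaction using Welford's online algorithm. The class name, threshold, and warm-up length are hypothetical.

```python
import math
import random


class StreamingAnomalyFlagger:
    """Flags values far from the running mean, using Welford's online mean/variance.
    A simple statistical stand-in for the ML models real fraud systems use."""

    def __init__(self, threshold: float = 3.0, warmup: int = 30):
        self.threshold = threshold  # how many standard deviations counts as "unusual"
        self.warmup = warmup        # observations to collect before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def observe(self, amount: float) -> bool:
        """Return True if `amount` looks anomalous, then fold it into the statistics."""
        flagged = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))  # sample standard deviation
            if std > 0 and abs(amount - self.mean) / std > self.threshold:
                flagged = True
        # Welford update: O(1) time and memory per transaction.
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (amount - self.mean)
        return flagged


random.seed(1)
flagger = StreamingAnomalyFlagger()
amounts = [random.gauss(50.0, 10.0) for _ in range(500)] + [900.0]  # synthetic amounts
flags = [flagger.observe(a) for a in amounts]
print(f"flagged {sum(flags)} of {len(amounts)}; last transaction flagged: {flags[-1]}")
```

A z-score rule can also flag a few ordinary transactions by chance. Production systems combine many features and learned models, but the same constraint applies: each check must fit inside a tight latency budget.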

Future Trends in Computing Power

Quantum Computing

Quantum computing leverages the principles of quantum mechanics to perform certain calculations that would be impractical for classical computers. While still in its early stages of development, quantum computing has the potential to revolutionize fields such as cryptography, drug discovery, and materials science.

- Quantum Bits (Qubits): Unlike classical bits, which are either 0 or 1, qubits can exist in a superposition of both states. A register of n qubits is described by 2^n amplitudes. Quantum algorithms use interference to amplify the amplitudes of correct answers, although measuring the register still yields a single outcome.

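- Entanglement: A phenomenon in which two or more qubits become correlated in a way that cannot be described by treating each qubit independently. The correlation persists even when the qubits are physically separated by large distances. Entanglement is a key resource that quantum algorithms exploit to achieve speedups on certain problems.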

- Potential Applications: Quantum computing could solve some problems that are currently intractable for classical computers. Examples include factoring large numbers, whose difficulty underpins widely used public-key cryptography such as RSA, and simulating the behavior of molecules.
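A small classical simulation makes superposition and entanglement concrete, and it also shows why classical machines struggle. The sketch below stores a 2-qubit state as a list of 2**n complex amplitudes. It applies a Hadamard gate to create a superposition, then a CNOT gate to create an entangled Bell state, and finally prints measurement probabilities. The helper names are hypothetical, and qubit 0 is taken to be the least significant bit of the basis-state index.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` (qubit 0 = least significant bit of the index)."""
    h = 1 / math.sqrt(2)
    new_state = list(state)
    bit = 1 << target
    for i in range(len(state)):
        if (i & bit) == 0:  # visit each pair of indices differing only in `target` once
            a, b = state[i], state[i | bit]
            new_state[i] = h * (a + b)
            new_state[i | bit] = h * (a - b)
    return new_state


def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new_state = list(state)
    c_bit, t_bit = 1 << control, 1 << target
    for i in range(len(state)):
        if i & c_bit:
            new_state[i ^ t_bit] = state[i]
    return new_state


n = 2
state = [0j] * (2 ** n)
state[0] = 1 + 0j                               # start in |00>
state = apply_hadamard(state, target=0)         # superposition of |00> and |01>
state = apply_cnot(state, control=0, target=1)  # Bell state (|00> + |11>) / sqrt(2)
probs = {format(i, f"0{n}b"): abs(a) ** 2 for i, a in enumerate(state)}
print(probs)
```

The final state gives (up to rounding) 50% probability each to 00 and 11, and zero to 01 and 10, so measuring one qubit fixes the other. Each extra qubit doubles the length of the list. At 16 bytes per double-precision complex amplitude, 50 qubits would already need 2**54 bytes (16 PiB), which is why simulating large quantum systems overwhelms classical hardware.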

Neuromorphic Computing

Neuromorphic computing aims to mimic the structure and function of the human brain. By using artificial neurons and synapses, neuromorphic chips can process information in a more energy-efficient and parallel manner than traditional computers.

- Artificial Neurons and Synapses: Neuromorphic chips use artificial neurons and synapses to process information in a way that is similar to the human brain.

- Event-Driven Processing: Neuromorphic chips only process information when there is a change in the input signal. This reduces power consumption and improves efficiency.

- Potential Applications: Neuromorphic computing has the potential to revolutionize fields such as robotics, image recognition, and natural language processing.
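Event-driven processing can be illustrated with a leaky integrate-and-fire neuron, a common simplified model of a spiking neuron. In the sketch below, computation happens only when an input spike arrives. The leak between spikes is applied in closed form, so silent periods cost nothing. This is a software illustration with hypothetical parameter values, not a description of how any particular neuromorphic chip is programmed.

```python
import math


def lif_event_driven(spike_events, tau=20.0, threshold=1.0):
    """Event-driven leaky integrate-and-fire neuron (a simplified model).

    spike_events: list of (time_ms, weight) input spikes, sorted by time.
    Between events the membrane potential decays as v * exp(-dt / tau), computed in closed
    form, so no work is done while the input is silent.
    Returns the times at which the neuron emits an output spike.
    """
    v = 0.0
    last_t = 0.0
    output_spikes = []
    for t, weight in spike_events:
        v *= math.exp(-(t - last_t) / tau)  # leak since the previous event
        v += weight                         # integrate the incoming spike
        last_t = t
        if v >= threshold:                  # fire, then reset the potential
            output_spikes.append(t)
            v = 0.0
    return output_spikes


burst = [(1.0, 0.4), (2.0, 0.4), (3.0, 0.4)]     # three inputs close together
sparse = [(1.0, 0.4), (50.0, 0.4), (100.0, 0.4)]  # the same inputs spread out
print(lif_event_driven(burst), lif_event_driven(sparse))
```

Three closely spaced inputs push the potential over the threshold. The same three inputs spread out leak away before they can add up, and in both cases the work done is proportional to the number of events rather than to elapsed time.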

Edge Computing Evolution

Edge computing continues to expand, driven by the increasing demand for low-latency applications and the proliferation of IoT devices. Future advancements will focus on developing more powerful and energy-efficient edge devices, as well as improving the security and management of edge networks.

- AI at the Edge: Running AI models on edge devices to enable real-time decision-making without relying on cloud connectivity.

- Federated Learning: Training AI models on decentralized data sources while preserving privacy.

- Secure Edge Computing: Implementing robust security measures to protect edge devices and data from cyber threats.
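Federated learning keeps raw data on each device and shares only model updates. The sketch below follows the federated averaging (FedAvg) pattern. Each hypothetical client runs a few steps of gradient descent on its own data for a one-parameter linear model y ≈ w·x. The server then averages the resulting weights, weighting each client by its number of examples. The model, learning rate, and synthetic client data are assumptions for illustration. Production systems add secure aggregation, client sampling, and much larger models.

```python
import random


def local_update(w, data, lr=0.01, epochs=5):
    """Hypothetical client step: a few epochs of full-batch gradient descent on the mean
    squared error of y ~ w * x. Only the updated weight is returned, never `data`."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients, rounds=50, w=0.0):
    """FedAvg-style loop: every round, each client trains locally from the current global
    weight, and the server averages the results weighted by client dataset size."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, data) for data in clients]
        # The server step uses only each client's returned weight and dataset size.
        w = sum(len(data) * lw for data, lw in zip(clients, local_weights)) / total
    return w


# Synthetic data for three hypothetical clients: the true relationship is y = 3x + noise,
# and each client sees a different range of x.
random.seed(42)
clients = []
for size, (lo, hi) in [(20, (0.0, 1.0)), (50, (1.0, 2.0)), (30, (2.0, 3.0))]:
    xs = [random.uniform(lo, hi) for _ in range(size)]
    clients.append([(x, 3.0 * x + random.gauss(0.0, 0.1)) for x in xs])

print(f"learned w = {federated_averaging(clients):.3f} (true value 3.0)")
```

Even though no single client sees the full range of x, the averaged model converges close to the true weight. This is the property that lets edge devices contribute to a shared model without uploading their data.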

- Actionable Takeaway: Stay informed about emerging trends in computing power. While quantum and neuromorphic computing are still in their early stages, they have the potential to disrupt many industries in the future. Consider how these technologies might impact your business and start exploring potential use cases.

Conclusion

Computing power is a fundamental enabler of innovation across various sectors, from artificial intelligence to scientific research and business analytics. By understanding the core components, measurement metrics, and influencing factors, we can better appreciate the advancements we’ve made and anticipate the exciting possibilities that lie ahead. As hardware and software continue to evolve, and as emerging technologies like quantum and neuromorphic computing mature, the potential for computing power to transform our world is enormous. Embracing these advancements and optimizing our use of computing resources will be key to unlocking the full potential of the digital age.
